Things I did

Grass

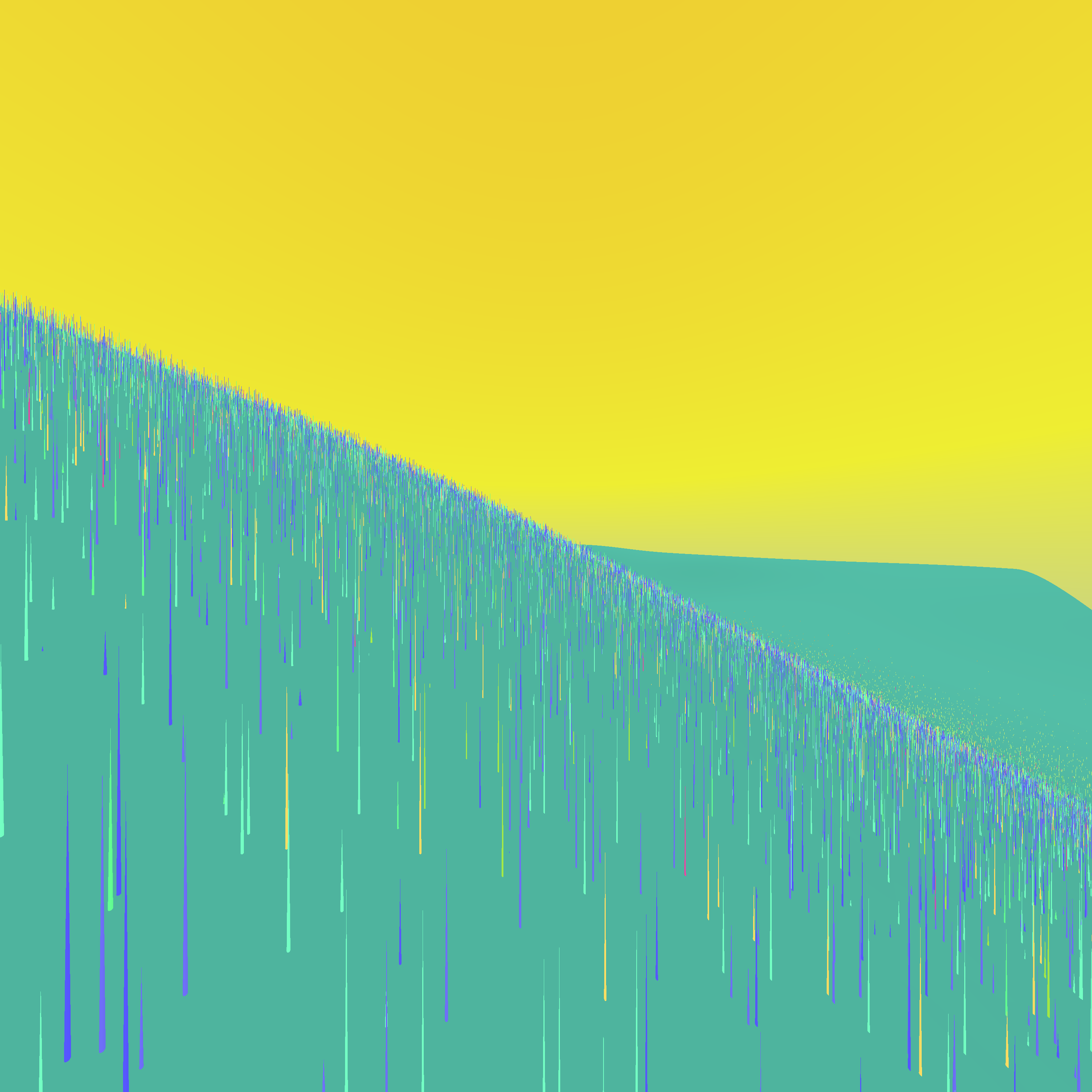

Updated the look of the "grass". It looked darker than it should because there was not enough lighting, and that was because I use point illumination.

So I added the "ambient" property to the grass. This magically makes it lit even if there is no light source.

Long term, I'll need to give lighting more thought: somehow simulate the fact that during the day the atmosphere itself emits some light (well, it scatters sunlight, anyway).

Result

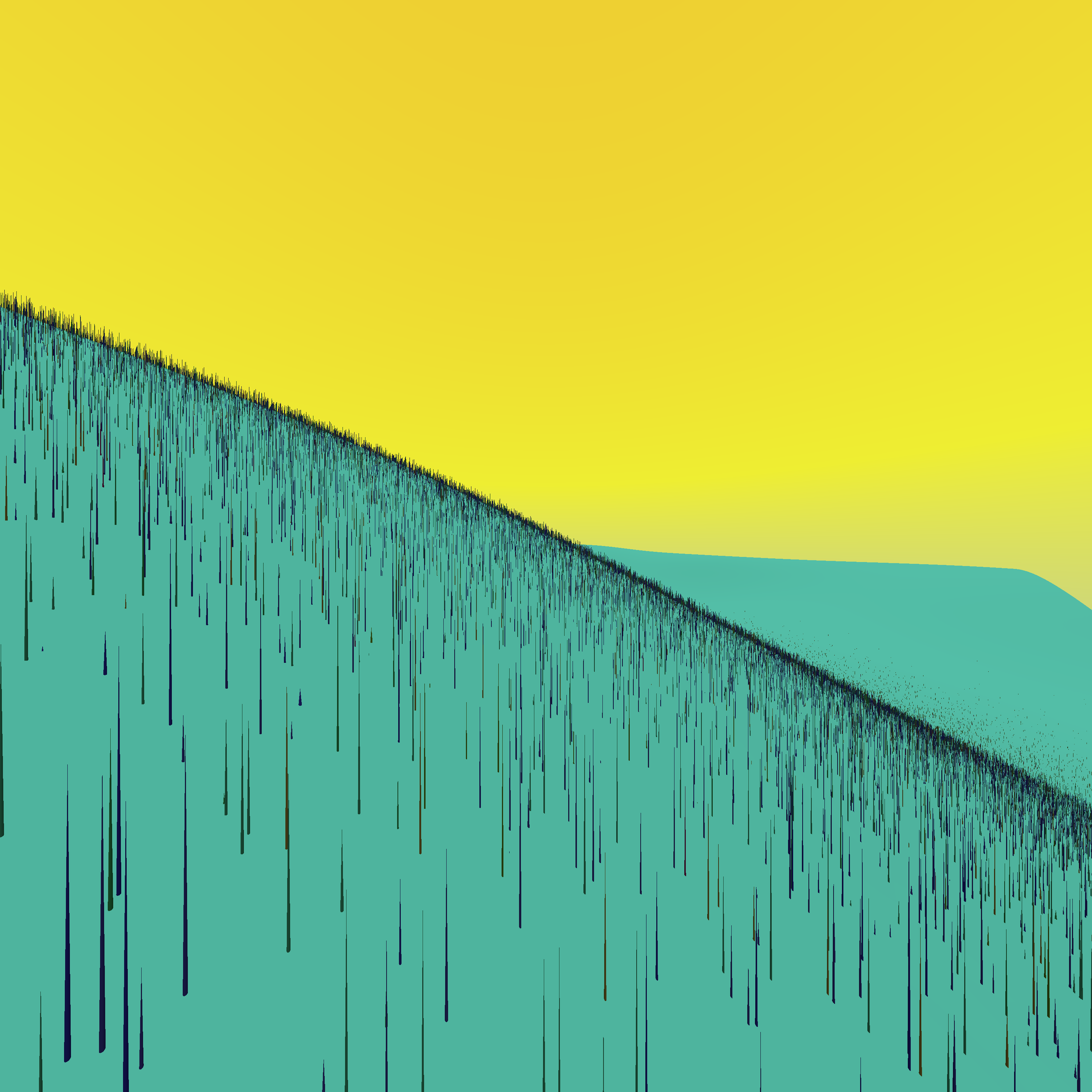

Before

After

Technical details

Previously, a blade of grass looked roughly like this:

```povray
cone {
  // Coordinates: base center, base radius, cap center, cap radius
  <0.11751987765853868, 0.33079267650261673, 0.09734413318477975>, 1e-05,
  <0.11751987765853868, 0.331318350241197, 0.09734413318477975>, 1e-08
  // Color:
  pigment {
    rgb <0.30000000000000016, 0.3000000000000006, 0.9999999999999999>
  }
  no_shadow
}
```

Now it looks like:

```povray
cone {
  // Coordinates: base center, base radius, cap center, cap radius
  <0.11751987765853868, 0.33079267650261673, 0.09734413318477975>, 1e-05,
  <0.11751987765853868, 0.331318350241197, 0.09734413318477975>, 1e-08
  // Color:
  texture {
    pigment {
      rgb <0.30000000000000016, 0.3000000000000006, 0.9999999999999999>
    }
    finish {
      // Full white ambient (White from colors.inc is rgb <1, 1, 1>)
      ambient rgb <1, 1, 1>
    }
  }
  no_shadow
}
```

Multi-processing file generation

After I added the ambient property, the .pov files grew significantly, and so did the runtime of the Python part. Since the program had no significant, easy-to-remove hot spots, I decided to just parallelize it.

Generating a single .pov file is inherently single-threaded (well, I could try to parallelize that, but it would be very tricky).

So I decided to parallelize the Python part across files (POV-Ray is already parallelized enough on its own, so it needs to be called sequentially).
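A minimal sketch of the "generate in parallel, render sequentially" shape, assuming one .pov file per frame. The names `generate_pov`, `render_with_povray`, `generate_then_render` and the `frame_NNNN.pov` naming are hypothetical, not the actual code from this project. It relies on `concurrent.futures.Executor.map`, which yields results in input order, so POV-Ray can already be rendering frame 0 while the pool is still generating later frames:

```python
import subprocess
from concurrent.futures import Executor, ProcessPoolExecutor
from pathlib import Path
from typing import Callable, Iterable, List


def generate_pov(frame: int) -> str:
    """Hypothetical CPU-heavy step: write the .pov file for one frame."""
    path = Path(f"frame_{frame:04d}.pov")
    path.write_text(f"// scene for frame {frame}\n")  # real generator goes here
    return str(path)


def render_with_povray(pov_path: str) -> None:
    """Render one file. POV-Ray already uses every core, so call it one at a time."""
    # +I names the input scene, -D disables the preview window
    subprocess.run(["povray", f"+I{pov_path}", "-D"], check=True)


def generate_then_render(frames: Iterable[int],
                         generate: Callable[[int], str],
                         render: Callable[[str], None],
                         executor: Executor) -> List[str]:
    """Generate files in parallel on `executor`, render them sequentially in order."""
    rendered = []
    # Executor.map submits every job up front and yields results in input order,
    # so rendering overlaps with the generation of later frames.
    for pov_path in executor.map(generate, frames):
        render(pov_path)  # only the parent process ever calls the renderer
        rendered.append(pov_path)
    return rendered


def main() -> None:
    with ProcessPoolExecutor() as pool:
        generate_then_render(range(10), generate_pov, render_with_povray, pool)
```

With `ProcessPoolExecutor` the `generate` callable has to be picklable (a module-level function, not a lambda), and `main()` should be called under an `if __name__ == "__main__":` guard so child processes don't re-run it. Taking the executor as a parameter also makes it easy to test the ordering logic with a thread pool and fake steps.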

Misc

I fiddled with code profiling and (unsurprisingly) the profile told me that the Python part of the code spends most of its time:

- Formatting the text files;

- Generating random numbers;

I looked at a couple of hot spots and shaved a couple of seconds off the execution time. Then I decided that further micro-optimization was not worth the effort.
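The post doesn't say which profiler was used; a minimal sketch with the stdlib `cProfile`/`pstats` modules looks like this. `format_cone` and `generate_scene` are hypothetical stand-ins for the generator. Sorting by `tottime` (time spent in the function itself) is what surfaces text formatting and random-number generation as the hot spots:

```python
import cProfile
import io
import pstats
import random


def format_cone(rng: random.Random) -> str:
    """Hypothetical stand-in: one randomly placed blade of grass as POV-Ray text."""
    x, y, z = rng.random(), rng.random(), rng.random()
    return (f"cone {{ <{x}, {y}, {z}>, 1e-05, <{x}, {y + 0.0005}, {z}>, 1e-08 "
            f"pigment {{ rgb <0.3, 0.3, 1.0> }} no_shadow }}\n")


def generate_scene(n: int, seed: int = 0) -> str:
    """Build the text of a scene with n blades."""
    rng = random.Random(seed)
    return "".join(format_cone(rng) for _ in range(n))


def profile_report(n: int = 20_000, limit: int = 10) -> str:
    """Profile generate_scene and return the top entries sorted by own time."""
    with cProfile.Profile() as profiler:
        generate_scene(n)
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("tottime").print_stats(limit)
    return out.getvalue()
```

`print(profile_report())` prints the table; in a sketch like this most of the own time lands in `format_cone` (float-to-text formatting) and in the `random` method of `Random`.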

Plans

Use object referencing

Try to decrease the size of the .pov file. This should do two things:

- Considerably decrease the runtime of the Python part of the program (less text to format and write to disk);

- Hopefully decrease the time POV-Ray needs to load the file, and maybe trigger some faster rendering paths.

Right now each blade of grass (and really, each randomly generated object) is written out in full every time it is placed, so I end up with 50 MB of blocks like the one below. Most of them differ only in their coordinates (and even the coordinates are similar).

```povray
cone {
  // Coordinates: base center, base radius, cap center, cap radius
  <0.11751987765853868, 0.33079267650261673, 0.09734413318477975>, 1e-05,
  <0.11751987765853868, 0.331318350241197, 0.09734413318477975>, 1e-08
  // Color:
  texture {
    pigment {
      rgb <0.30000000000000016, 0.3000000000000006, 0.9999999999999999>
    }
    finish {
      // Full white ambient (White from colors.inc is rgb <1, 1, 1>)
      ambient rgb <1, 1, 1>
    }
  }
  no_shadow
}
```

However, POV-Ray allows me to:

- Declare the object;

- Then reference it inside the file and transform it.

So I could:

- Declare a "canonical" blade of grass for each patch of grass;

- Then just reference it, translating it to the appropriate position.
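A minimal sketch of what the generator could emit under this plan, assuming one canonical blade built at the origin per patch. `declare_blade`, `place_blades` and the `Blade_0` identifier are hypothetical. The output uses POV-Ray's `#declare` and `object { ... translate <...> }` statements, so each placed blade costs one short line instead of a full `cone` block:

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


def declare_blade(name: str, height: float, rgb: Vec) -> str:
    """Emit one canonical blade of grass, built at the origin."""
    r, g, b = rgb
    return (
        f"#declare {name} = cone {{\n"
        f"  <0, 0, 0>, 1e-05, <0, {height}, 0>, 1e-08\n"
        f"  texture {{\n"
        f"    pigment {{ rgb <{r}, {g}, {b}> }}\n"
        f"    finish {{ ambient rgb <1, 1, 1> }}\n"
        f"  }}\n"
        f"  no_shadow\n"
        f"}}\n"
    )


def place_blades(name: str, positions: List[Vec]) -> str:
    """Reference the declared blade once per position, moved with translate."""
    # Six decimals is plenty for blades about 5e-4 units tall and keeps lines short
    return "".join(
        f"object {{ {name} translate <{x:.6f}, {y:.6f}, {z:.6f}> }}\n"
        for x, y, z in positions
    )
```

If identical blades within a patch look too uniform, each `object` can also carry a `rotate y*angle` before the `translate`.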

Texture the height field

I can't put much more grass into the files without losing too much development velocity (I'd like to see updated results after a couple of minutes, not hours).

However, distant parts of the landscape look, well, bare.

So the idea is to generate a very big texture file that simulates patches of grass using just color, and hope nobody notices in the distance ;)
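A minimal sketch of such a texture, assuming a binary PPM (P6) output so no third-party imaging library is needed. `grass_texture`, the two greens and the `cell` size are hypothetical choices. It draws a coarse grid of random values and bilinearly interpolates between them, which gives soft blotches rather than per-pixel noise:

```python
import random


def grass_texture(width: int, height: int, cell: int = 32, seed: int = 0) -> bytes:
    """Return a binary PPM (P6) image of soft, blotchy green patches."""
    rng = random.Random(seed)
    gw, gh = width // cell + 2, height // cell + 2
    # Coarse grid of random "grassiness" values in [0, 1]
    grid = [[rng.random() for _ in range(gw)] for _ in range(gh)]
    dark, light = (40, 70, 20), (110, 160, 50)  # hypothetical grass colors
    pixels = bytearray()
    for y in range(height):
        gy, fy = divmod(y, cell)
        ty = fy / cell
        for x in range(width):
            gx, fx = divmod(x, cell)
            tx = fx / cell
            # Bilinear interpolation between the four surrounding grid values
            top = grid[gy][gx] * (1 - tx) + grid[gy][gx + 1] * tx
            bottom = grid[gy + 1][gx] * (1 - tx) + grid[gy + 1][gx + 1] * tx
            t = top * (1 - ty) + bottom * ty
            pixels.extend(round(d + (l - d) * t) for d, l in zip(dark, light))
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(pixels)
```

Writing it out is `Path("grass.ppm").write_bytes(grass_texture(2048, 2048))`, and POV-Ray can read it with `pigment { image_map { ppm "grass.ppm" } }`. The image map is projected onto the x-y plane by default, so it needs a transform to lie on the height field. Pure Python is slow for really big images; NumPy would be the obvious speed-up.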

Play with the POV-Ray quality knob

Apparently POV-Ray has a "quality" flag (the +Q command-line option); I need to fiddle with it.
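A minimal sketch of how the quality setting could be wired into quick preview renders. `povray_command` is a hypothetical helper; `+I`, `+Q`, `+W`, `+H` and `-D` are real POV-Ray command-line options, and quality runs from 0 to 11 with 9 as the default. Lower values skip progressively more effects; which ones exactly is in the POV-Ray documentation:

```python
from typing import List


def povray_command(pov_path: str, quality: int = 9,
                   width: int = 800, height: int = 600) -> List[str]:
    """Build a POV-Ray command line; lower quality trades accuracy for speed."""
    if not 0 <= quality <= 11:
        raise ValueError("POV-Ray quality must be between 0 and 11")
    return [
        "povray",
        f"+I{pov_path}",   # input scene file
        f"+Q{quality}",    # render quality (9 is the default)
        f"+W{width}",      # image width in pixels
        f"+H{height}",     # image height in pixels
        "-D",              # don't open a preview window
    ]
```

For a fast, low-resolution check during development: `subprocess.run(povray_command("scene.pov", quality=4, width=320, height=240), check=True)`.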

Things that did not work as planned

- I had to rewrite this "multiprocessing" part three times. The problem "do the first step in a multi-process way, then run the second step sequentially" is tricky and not covered by the stdlib;

- I tried playing with indirect diffuse lighting (the radiosity setting). It made renders take an order of magnitude longer, and they didn't look great. Maybe next time.